So you’re embarking on your journey into data science, and everyone recommends that you start by learning how to code. You decided on Python and are now paralyzed by the large piles of learning resources at your disposal. Perhaps you are overwhelmed and, owing to analysis paralysis, you keep putting off your first steps in learning how to code in Python.

In this article, I’ll be your guide and take you on a journey through the bare minimum of essential knowledge that you need in order to master Python for getting started in data science. I will assume that you have no prior coding experience or that you may come from a non-technical background. However, if you come from a technical or computer science background, already know another programming language and would like to transition to Python, you can use this article as a high-level overview to get acquainted with the gist of the Python language. Either way, the aim of this article is to navigate you through the landscape of the Python language at its intersection with data science, which will help you get started in no time.

1. Why Python?

The first (and famous) question that you may have is:

What programming language should you learn?

After doing some research on the internet, you may have decided on Python for the following reasons:

- Python is an interpreted, high-level programming language with a readable, easy-to-understand syntax

- Python has ample learning resources available

- Python is highly versatile and has multiple use cases, according to the Python Developers Survey 2020 conducted by the Python Software Foundation and JetBrains

- Python has a vast collection of libraries for performing various operations and tasks

- Python is a popular language according to the TIOBE index and the Stack Overflow Developer Survey

- Python is a skill highly sought after by employers

2. Use Cases for Python

Another question that you may have is:

How exactly will Python help your data science projects?

To answer this question, let’s consider the data life cycle as shown below. In essence, there are five major steps: data collection, data cleaning, exploratory data analysis, model building and model deployment. All of these steps can be implemented in Python, and once coded, the resulting code is reusable and can therefore be repurposed for other related projects.

Aside from data analytics, data science and data engineering, the great versatility of Python allows it to be applied to endless possibilities, including automation, robotics, web development, web scraping, game development and software development. Moreover, Python is being used in practically every domain that we can think of, including but not limited to Aerospace, Banking, Business, Consulting, Healthcare, Insurance, Retail and Information Technology (see the Data Sheet made by ActiveState on Top 10 Python Use Cases).

3. Mindsets for Learning Python

3.1. Why are you Learning Python?

Figuring out why you’re learning Python may help you stay motivated when life gets in the way. Consistency and good habits can only get you so far; having a clear reason for learning can boost your motivation and steer you back on track.

3.2. Don’t be Afraid to Fail or Make Mistakes

Part of learning any new technical skill is taking a dive-in approach. Don’t be afraid of failing or getting stuck, as these are inevitable. Remember that if you’re not stuck, you’re not learning! I have always liked to approach new things by making as many mistakes as possible; the plus side is the valuable lessons learned, which can be used when tackling the problem the second time around.

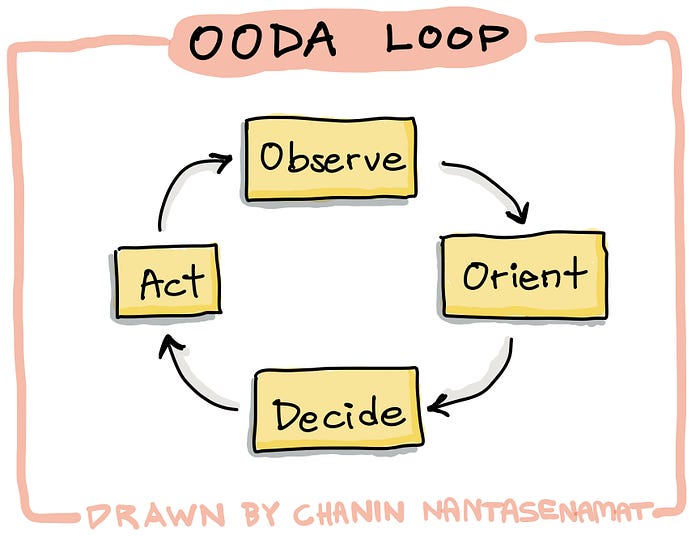

In fact, I learned about the OODA loop from one of Daniel Bourke’s blog posts: the faster we can iterate through this loop, the better we will be at attaining our intended goals.

The OODA loop stands for Observe, Orient, Decide and Act, and was originally a military strategy devised by US Air Force Colonel John Boyd for use in combat. The key is speed: the faster you cycle through the loop, the better. Thus, when applied to coding projects, the faster we can iterate through the OODA loop, the more we will learn.

3.3. Learning How to Learn

There are ample resources available for learning Python. Depending on the approach that resonates with you, select those that maximize your learning potential.

3.3.1. Reading

If reading is your thing, there are several great books and written tutorials that you can learn Python from.

→ Python Basics: A Practical Introduction to Python 3

→ Automate the Boring Stuff with Python: Practical Programming for Total Beginners

→ Python Crash Course: A Hands-On, Project-Based Introduction to Programming

Some good books using Python in the context of data science and machine learning are as follows:

→ Python Data Science Handbook: Essential Tools for Working with Data

→ Python for Data Analysis: Data Wrangling with Pandas, NumPy, and IPython

→ Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems

3.3.2. Visual

If you are more of a visual person, there are a lot of great YouTube channels out there that teach concepts and offer practical tutorials. These include freeCodeCamp, Data Professor, Coding Professor, CS Dojo, Corey Schafer, Tech with Tim, Python Programmer, Data School, Keith Galli, Kylie Ying and Programming with Mosh.

3.3.3. Projects

Nothing beats learning by doing. It is also the best way to push your learning to its limits. The large collection of datasets available on Kaggle is a great starting point for finding inspiration for your own projects. Coursera’s Guided Projects are another way to implement projects under the guidance of a course mentor. If you’re into live training, Data Science Dojo has an introductory Python for Data Science program that can be completed in a week.

3.4. Shift from Following Coding Tutorials to Implementing your own Projects

Being able to successfully follow tutorials and being able to implement your own projects from scratch are two different things. Sure, you can successfully follow each step of a tutorial, but when the time comes to figure out on your own which approach, libraries or functions to use, you may succumb to the challenge and get stuck.

So how exactly can you make the transition from being a follower of coding tutorials to actually being able to implement your own projects? Find out in the next section.

3.5. Learning to Solve Problems

The answer is quite simple. You have to start doing projects. The more, the merrier. As you accumulate experience from many projects, you’re going to acquire skills pertaining to problem solving and problem framing.

To get started, follow this process:

- Select an interesting problem to work on.

- Understand the problem.

- Break down the problem into its smallest parts.

- Implement the small parts. Next, piece together the results of these parts and see holistically whether they address the problem.

- Rinse and repeat.

You may also find a project more engaging if you’re passionate about the topic or if it piques your interest. Look around you: what topics interest you, and what would you like to know more about? For example, if you are a YouTube content creator, you may find it interesting to analyze your content in relation to its performance (e.g. views, hours watched, watch time, video click-through rate, etc.).
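To make the process above a bit more concrete, here is a minimal sketch of the YouTube example broken into small parts. It assumes a hypothetical list of video records with made-up view counts (not real channel data), and the helper function names are hypothetical too; in a real project you would load your own analytics export instead.

```python
# Hypothetical video records: titles, topics and view counts are made up
videos = [
    {"title": "Intro to pandas", "topic": "pandas", "views": 1200},
    {"title": "pandas groupby", "topic": "pandas", "views": 800},
    {"title": "Streamlit app", "topic": "streamlit", "views": 1500},
    {"title": "Streamlit deploy", "topic": "streamlit", "views": 900},
    {"title": "Python basics", "topic": "python", "views": 2000},
]


# Small part 1: collect the view counts for each topic
def group_views_by_topic(records):
    groups = {}
    for record in records:
        groups.setdefault(record["topic"], []).append(record["views"])
    return groups


# Small part 2: average the views within each topic
def average_per_topic(groups):
    return {topic: sum(views) / len(views) for topic, views in groups.items()}


# Small part 3: find the topic with the highest average
def best_topic(averages):
    return max(averages, key=averages.get)


# Piece the parts together and check whether they answer the question
averages = average_per_topic(group_views_by_topic(videos))
print(averages)              # {'pandas': 1000.0, 'streamlit': 1200.0, 'python': 2000.0}
print(best_topic(averages))  # python
```

Each small function can be tested on its own before being pieced together, which is exactly what makes the "rinse and repeat" step cheap.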

3.6. Don’t Reinvent the Wheel

You should become familiar with using Python functions to perform various tasks. In a nutshell, the thousands of Python libraries available on PyPI, conda or GitHub come pre-equipped with a wide range of functions that you can use right out of the box.

In essence, a function normally takes in input arguments, which it uses to perform a pre-defined task or set of tasks before returning the output. Input arguments can be explicitly specified when calling the function, but if an optional argument is left out, Python uses the default value defined for that parameter.
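As a small illustration, Python's built-in round() takes an optional second argument, ndigits; when you leave it out, round() falls back to its default behavior of rounding to the nearest integer. The same mechanism applies to functions you write yourself, sketched here with a hypothetical describe_value helper.

```python
# round() is built in; its second argument (ndigits) is optional
print(round(3.14159))     # 3     (ndigits omitted: nearest integer)
print(round(3.14159, 2))  # 3.14  (ndigits given explicitly)


# Hypothetical helper: your own functions can declare defaults the same way
def describe_value(value, label="value", decimals=1):
    """Return 'label: value' with the value rounded to `decimals` places."""
    return f"{label}: {round(value, decimals)}"


print(describe_value(2.71828))                         # value: 2.7
print(describe_value(2.71828, label="e", decimals=3))  # e: 2.718
```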

Before embarking on writing your own custom function, do some Googling to see whether existing functions from libraries already perform something similar to what you plan on implementing. Chances are there is already an existing function that you simply need to import and use.
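For example, before writing your own median function, it is worth knowing that Python's standard library already ships one in the statistics module. The sketch below compares a hand-rolled my_median (a hypothetical helper written only for comparison) with statistics.median; both agree, but the library version is less code for you to write, test and maintain.

```python
import statistics

scores = [88, 92, 79, 93, 85]


# Hypothetical hand-rolled version: more code to write, test and maintain
def my_median(values):
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]                       # odd count: middle value
    return (ordered[mid - 1] + ordered[mid]) / 2  # even count: mean of the two middle values


# The standard library already provides this (and more)
print(my_median(scores))          # 88
print(statistics.median(scores))  # 88
print(statistics.mean(scores))    # 87.4
```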

3.7. Learning New Libraries

It should be noted that if you’re familiar with one library, you’re also likely to be able to pick up other related libraries. For example, if you’re already familiar with using matplotlib, seaborn or plotly should be fairly easy to learn and implement because the foundations are already in place. The same goes for deep learning libraries (e.g. tensorflow, torch, fastai and mxnet).

3.8. Debugging and Asking for Help

As you code your projects, your code will inevitably throw errors, so it is essential that you learn how to debug code and solve problems. However, if an error goes beyond your comprehension, it is very important that you know how to ask for help.

The first person you should ask is yourself. Yes, I know you may be wondering how that would work, since you’re clueless right now. By asking yourself, I mean that you first try to solve the problem on your own before asking others for help. This is important because it shows your character and your persistence.

So how exactly can you debug code and solve problems?

- Read the output carefully, as the reason for the error is explicitly written there. Sometimes even the simplest thing may have slipped our mind, such as forgetting to install a library or forgetting to define a variable before using it.

- If you are still clueless, it’s time to start Googling. This is where you will have to master the art of asking the right questions. If you search Google using irrelevant keywords, you may not find any useful answers in the search results. The easiest way is to include keywords related to the problem.

In the search query, I would use the following keywords:

`<name of Python or R library> <copy and paste the error message>`

Yes, that’s basically it. But let’s say I would like to limit my search to Stack Overflow only; then I would add site:stackoverflow.com as an additional term in the search query. Alternatively, you can head over to Stack Overflow and perform the search there.
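Here is a small sketch of that workflow, under a few assumptions: the undefined variable price is used on purpose to trigger a NameError, and build_search_url is a hypothetical helper that pastes the library name and error message into a Google search URL (the https://www.google.com/search?q= format is an assumption here; typing the query by hand works just as well).

```python
from urllib.parse import quote_plus


def build_search_url(library, error_message, site=None):
    """Hypothetical helper: '<library> <error message>' as a Google search URL."""
    query = f"{library} {error_message}"
    if site:
        query += f" site:{site}"  # e.g. restrict results to Stack Overflow
    return "https://www.google.com/search?q=" + quote_plus(query)


try:
    total = price * 2  # 'price' was never defined, so Python raises NameError
except NameError as err:
    # Step 1: read the error; the message says exactly what went wrong
    message = f"{type(err).__name__}: {err}"
    print(message)  # NameError: name 'price' is not defined
    # Step 2: turn the message into a search query
    print(build_search_url("python", message, site="stackoverflow.com"))
```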

3.9. Consistency and Accountability

A recurring theme in any learning journey is developing a habit of learning, which will help you stay consistent. Coding consistently builds momentum, and the more you code, the more skillful you’ll become. As the saying goes:

Use it or lose it.

The 66 Days of Data initiative started by Ken Jee is a great way to build good habits of learning, maintain consistency and gain accountability for your learning. Taking part in this initiative is quite easy:

4. Python Coding Environment

4.1. Integrated Development Environment

An integrated development environment (IDE) can be thought of as the workspace that houses your code; beyond that, it also provides amenities and conveniences that can augment your coding process.

Basic features of an IDE include syntax highlighting, code folding, bracket matching, awareness of the file paths in the project, as well as the ability to run selected code blocks or the entire file. More advanced features might include code suggestions/completion, debugging tools and support for version control.

Popular IDEs for Python include:

- VS Code — A powerful and highly customizable IDE.

- PyCharm — Another powerful IDE. Unlocking all of its features requires a paid subscription to the Pro version, but the free Community version offers a good standard IDE.

- Spyder — R and MATLAB users will find that it has an RStudio/MATLAB vibe.

- Atom — Sports a beginner-friendly interface that is highly customizable.

4.2. Jupyter Notebook

Jupyter Notebook can be installed locally on any operating system of your choice (be it Windows, Linux or macOS) via pip or conda. Another flavor of Jupyter is JupyterLab, which provides a workspace and an IDE-like environment for larger and more complex projects.

Cloud variants of the Jupyter notebook have been a blessing to aspiring and practicing data scientists alike, as they give everyone access to powerful computational resources (i.e. both CPU and GPU computing).

I conducted a survey in the community section of my YouTube channel (Data Professor) to see which of the cloud-based Jupyter notebooks were most popular amongst the community.

As can be seen above, the five popular Jupyter notebooks include:

It should be noted that all of these notebooks provide a free tier (with access to limited computational resources) as well as a pro tier (with access to more powerful computational resources) that may require some upfront cost. There may be more, but these are the ones I have heard of most frequently.

4.3. Useful Plug-ins

Code suggestion and completion plug-ins, such as the one offered by Kite, provide immense support for speeding up the coding process by suggesting how to complete lines of code. This comes in handy especially when you have to sit for long hours coding: a couple of seconds saved here and there can add up drastically over time. Such code suggestions may also double as an educational or reinforcement tool (i.e. sometimes our mind gets bogged down over long periods of coding), as they may suggest certain code blocks based on the context of the code that we have written.

5. Fundamentals of Python

In this section, we will cover the bare minimum that you need to know to get started in Python.

- Variables, Data types and Operators — This is the most important topic, as you’ll be using it in virtually all your projects. You can think of it as the letters of the alphabet, the building blocks that you use for spelling words. Defining and using variables allows you to store values for later use, and the various data types give you the flexibility to make use of data (i.e. whether it be numerical or categorical data that is quantitative or qualitative). Operators allow you to process and filter data.

- List comprehensions and operations — These will be useful for pre-processing data arrays, as datasets are essentially collections of numerical or categorical values.

- Loops — Loops such as `for` and `while` allow us to iterate through each element in an array, list or data frame to perform the same task. At a high level, this allows us to automate the processing of data.

- Conditional statements — `if`, `elif` and `else` allow the code to decide which path to take when handling or processing the input data. We can use them to perform a certain task only if a certain condition is met. For example, we can use them to figure out the data type of the input data: if it is numerical, we perform processing task A; otherwise (`else`), we perform processing task B.

- Creating and using functions — Creating functions helps to group similar processing tasks together in a modular manner, which saves your future self time because it makes your code reusable.

- File handling — Reading and writing files; creating, moving and renaming folders; setting environment paths; navigating through the paths, etc.

- Error and exception handling — Errors are inevitable, and handling them properly is a great way to prevent the code from crashing and halting altogether.
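The sketch below touches each of these fundamentals in turn, using only the standard library. The temperature readings, the thresholds in the hypothetical label_temperature function and the readings.csv file name are all made up for illustration.

```python
import csv
import os
import tempfile

# Variables, data types and operators
city = "Bangkok"                      # str: text
sensor_count = 4                      # int: whole number
threshold_c = 20.0                    # float: decimal number
has_sensor = True                     # bool: True or False
ready = has_sensor and sensor_count > 0  # logical and comparison operators

# List comprehension: convert every Celsius reading to Fahrenheit in one line
temps_c = [21.5, 23.0, 19.8, 25.1]
temps_f = [c * 9 / 5 + 32 for c in temps_c]


# Function with conditional statements (hypothetical thresholds)
def label_temperature(celsius):
    if celsius >= 25:
        return "hot"
    elif celsius >= threshold_c:
        return "warm"
    else:
        return "cool"


# Loop: apply the same task to every element
labels = []
for c in temps_c:
    labels.append(label_temperature(c))

# File handling: write a CSV file to a temporary folder, then read it back
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "readings.csv")
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["celsius", "label"])
        writer.writerows(zip(temps_c, labels))
    with open(path, newline="") as f:
        rows = list(csv.DictReader(f))

# Error and exception handling: skip bad values instead of crashing
raw_values = ["21.5", "n/a", "19.8"]
parsed = []
for value in raw_values:
    try:
        parsed.append(float(value))
    except ValueError:
        print(f"Skipping invalid value: {value!r}")

print(city, ready, labels, rows[0], parsed)
```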

6. Python Libraries for Data Science

- Data handling — `pandas` is the go-to library for handling common data formats, either as a 1-dimensional `Series` (i.e. can be thought of as a single column of a `DataFrame`) or a 2-dimensional `DataFrame` (i.e. common for tabular datasets).

- Statistical analysis — `statsmodels` is a library that provides functions for statistical tests and statistical models, including linear regression models.

- Machine learning — `scikit-learn` is the go-to library for building machine learning models. Aside from model building, the library also contains example datasets, as well as utility functions for pre-processing datasets and for evaluating model performance.

- Deep learning — Popular libraries for deep learning include TensorFlow (`tensorflow`) and PyTorch (`torch`). Other libraries include `fastai` and `mxnet`.

- Scientific computation — `scipy` is the go-to library for scientific and technical computation, encompassing integration, interpolation, optimization, linear algebra, image and signal processing, etc.

- Data visualization — There are several libraries for data visualization, and the most popular is `matplotlib`, which allows the generation of a wide range of plots. `seaborn` is an alternative library that builds on matplotlib, but its resulting plots are more refined and attractive. `plotly` allows the generation of interactive plots.

- Web applications — `django` and `flask` are standard web frameworks for web development as well as for deploying machine learning models. In recent years, minimal and more lightweight alternatives have gained popularity as they are simple and quick to implement. Some of these include `streamlit`, `dash` and `pywebio`.
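As a quick taste of the `Series`/`DataFrame` distinction described above, here is a minimal pandas sketch. It assumes pandas is installed (e.g. via pip or conda), and the city temperatures are made-up example values.

```python
import pandas as pd

# A DataFrame is a 2-dimensional table (the values are made up)
df = pd.DataFrame({
    "city": ["Bangkok", "Oslo", "Lima"],
    "temp_c": [33.0, 4.5, 19.0],
})

# Selecting a single column gives a 1-dimensional Series
temps = df["temp_c"]
print(type(temps).__name__)  # Series
print(temps.mean())

# Boolean filtering keeps only the rows that meet a condition
warm = df[df["temp_c"] > 10]
print(warm)
```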

7. Writing Beautiful Python Code

In 2001, Guido van Rossum and Barry Warsaw (later joined by Nick Coghlan) authored PEP 8, a document that establishes a set of guidelines and best practices for writing Python code. PEP is an acronym for Python Enhancement Proposal, and PEP 8 is just one of many such documents. The major aim of PEP 8 is to improve the readability and consistency of Python code.

As Guido van Rossum puts it:

“Code is read much more often than it is written”

Thus, writing readable code is like paying it forward, because it will be read either by your future self or by others. A situation I often encountered was poorly documented code, written either by myself or by my colleagues, but luckily we have improved over the years.
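To illustrate what PEP 8 is getting at, compare the two functions below. They compute the same thing, but the second follows a few of PEP 8's recommendations: lowercase names with underscores, descriptive parameter names, spaces around operators and one statement per line. Both function names are hypothetical examples.

```python
# Harder to read: cryptic names, no spaces, whole function on one line
def f(a,b):return a*b/100


# Closer to PEP 8: descriptive snake_case names, spaces around operators,
# and the body on its own line indented by 4 spaces
def percentage_of(total, percent):
    return total * percent / 100


print(f(250, 12))              # 30.0
print(percentage_of(250, 12))  # 30.0
```

Linters such as flake8 or pycodestyle can flag most of these issues automatically, so you don't need to memorize the whole guide on day one.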

8. Documenting your Code

It is always a great idea to document your code as soon as you have written it because, as time passes, chances are you will forget some of the reasons why you used a certain approach over another. I also find it helpful to include the train of thought that went into certain blocks of code, which your future self may appreciate, as it may very well serve as a good starting point for improving the code at a later time (i.e. when your brain is in a fresh mental state and ideas may flow better).
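One lightweight way to do this in Python is a docstring placed right under the function definition, which tools such as help() can display later. The sketch below uses a hypothetical moving_average function; the Args/Returns layout follows one common convention (Google style), which is an assumption rather than something PEP 8 requires, and the closing note shows where the train of thought behind a design choice can live.

```python
def moving_average(values, window=3):
    """Return the simple moving averages of `values`.

    Args:
        values: A list of numbers, e.g. daily view counts.
        window: How many consecutive values to average (default 3).

    Returns:
        A list of len(values) - window + 1 averages.

    Note to future self: plain Python was chosen over NumPy to keep this
    dependency-free; revisit if it ever becomes a bottleneck.
    """
    # Fail early with a clear message instead of returning an empty list
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


print(moving_average([3, 5, 7, 9]))  # [5.0, 7.0]
```

Calling help(moving_average) in an interpreter or notebook prints this docstring back to you.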

9. Practicing your Newfound Python Skills

Doing crossword puzzles or sudoku is good mental exercise, so why not do the same to put your Python skills to the test? Platforms such as LeetCode and HackerRank help you learn and practice data structures and algorithms as well as prepare for technical interviews. Similar platforms in the data science realm include Interview Query and StrataScratch.

Kaggle is a great place for aspiring data scientists to learn data science by participating in data competitions. Aside from this, there are ample datasets to practice with as well as a large collection of community-published notebooks to draw inspiration from. If you’re keen on making it to the top as a Kaggler, the Coursera course How to Win a Data Science Competition: Learn from Top Kagglers can help you.

10. Sharing your Knowledge

Programmers often rely on a rubber duck as a teaching tool: they explain their problems to the duck, and in doing so gain perspective and often find a solution to those problems.

Likewise, whether you are an aspiring data scientist or a practicing data scientist learning a new tool, the best way to learn is to teach it to others, as the Feynman Technique also suggests.

So how exactly can you teach? There are many ways, as listed below:

- Teach a colleague — Perhaps you host an intern whom you can teach.

- Write a Blog — Blogs are a great way to teach as there are a vast number of learners who look to blog posts to learn something new. Popular platforms for technical blogs include Medium, Dev.to and Hashnode.

- Make a YouTube video — You can make a video explaining a concept that you’re learning, or even a practical tutorial showing how to use a particular library, how to build a machine learning model, how to build a web app, etc.

11. Contributing to Open Source Projects

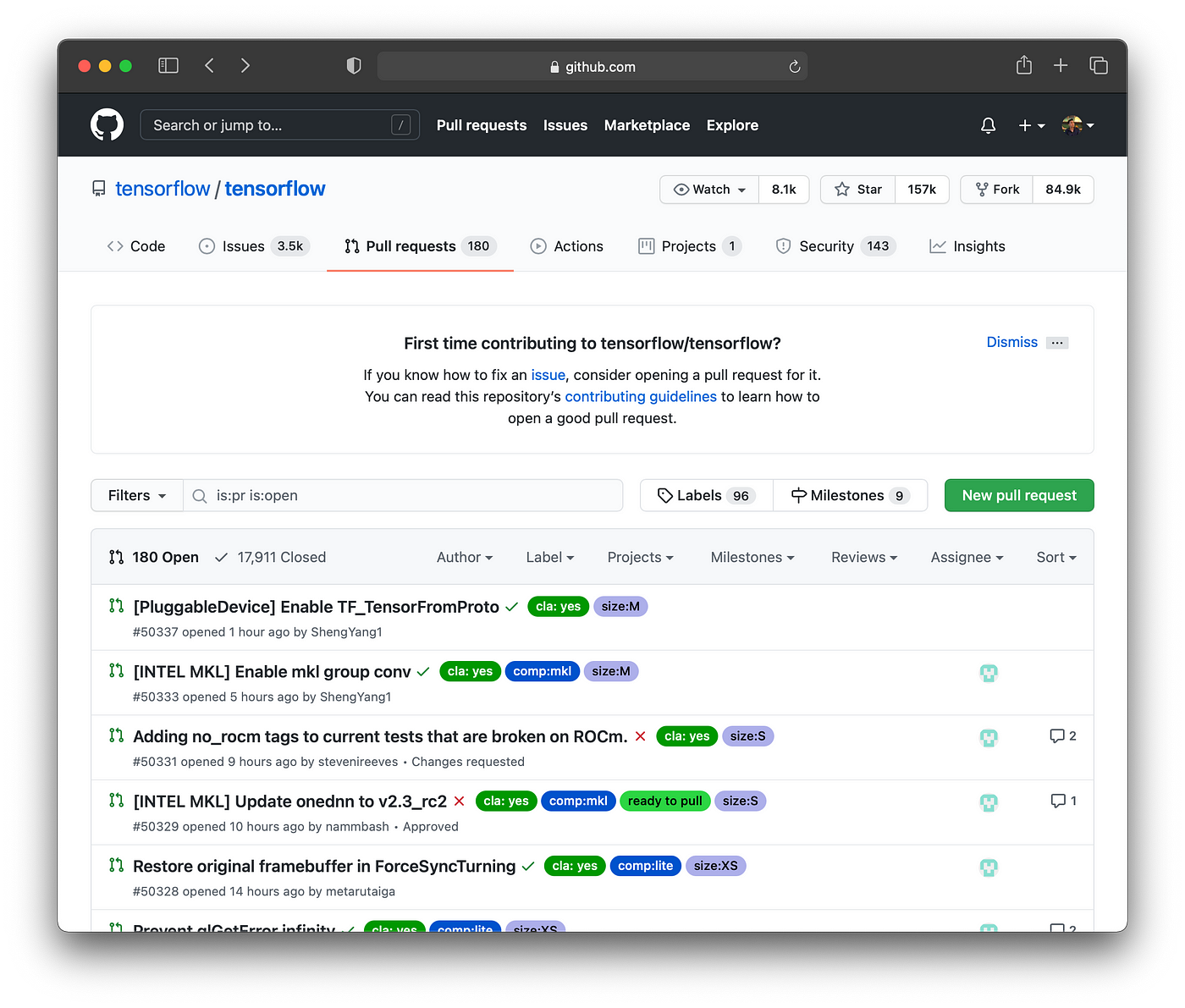

Contributing to open source projects has many benefits. Firstly, you’ll be able to learn from other experts in the field as well as by reviewing other people’s code (i.e. by reviewing Issues and Pull Requests).

Secondly, you will get the opportunity to become familiar with Git as you contribute your code (i.e. open source projects are hosted on GitHub as well as related platforms such as Bitbucket, GitLab, etc.).

Thirdly, you’ll be able to network with others in the community as you gain community and peer recognition. You will also gain more confidence in your coding abilities. Who knows, maybe you’ll even create your very own open source project!

Fourthly, you’re paying it forward, as open source projects rely on developers contributing on a volunteer basis. Contributing to the greater good may also give you a sense of satisfaction that you’re making an impact on the community.

Fifthly, you’ll gain valuable experience contributing to a real-world project, which may help you land an internship or job in the future.

Contributing does not mean that you will have to create a big and complex enhancement to the project. You can start by correcting a small bug that you may have come across and that should get the momentum rolling.

An added benefit is that as you fix the code you may also improve your coding and documentation skills (i.e. writing readable and maintainable code). Moreover, the challenge and accountability that comes from this may also help you stay engaged in coding.

Conclusion

In summary, this article explores the landscape of Python as applied to data science. As a self-taught programmer I know how tough it may be to not only learn coding but also to apply it to solve data problems. The journey won’t be easy, but if you can persevere, you’ll be amazed at how much you can do with Python in your data science journey.

I hope that this article provided some starting points that you can adapt as you embark on your learning journey. Please drop a comment to suggest any point that works for you!

Disclosure

- As an Amazon Associate and affiliate member of services mentioned herein, I may earn from qualifying purchases, which go into helping the creation of future content.
