Introduction:

Artificial Intelligence (AI) is an interdisciplinary field concerned with building intelligent agents: systems that perform tasks that typically require human intelligence. The idea of a thinking machine dates back to antiquity, long before anyone had the technology to build one. This report traces the history of AI and highlights its major breakthroughs and milestones.

The Beginnings of AI:

The origins of AI can be traced back to ancient times, when Greek myths described mechanical men that could think and act like humans. One of the earliest recorded attempts at a device for mechanical reasoning came from the philosopher Ramon Lull (also spelled Llull) in the 13th century. Lull's device used a series of rotating discs inscribed with symbols to generate combinations of concepts, which were meant to answer questions or solve problems.

The Modern Era:

The modern era of AI began in the mid-20th century, alongside the development of electronic computers. In 1943, Warren McCulloch and Walter Pitts introduced the first mathematical model of an artificial neuron, inspired by the workings of the brain; networks of such units are the ancestors of today's artificial neural networks. In 1950, Alan Turing proposed what is now called the "Turing Test" (he called it the "imitation game"), which asks whether a machine's conversational behavior can be told apart from a human's.
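To make the McCulloch-Pitts idea concrete, here is a minimal sketch of a threshold unit in Python. It is an illustration rather than a reproduction of the 1943 paper: the function names are made up for this example, and negative weights stand in for the paper's inhibitory inputs, which is a simplification.

```python
def mcp_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hypothetical helpers: basic logic gates built from a single unit each.
def logical_and(a, b):
    # Both inputs must be on to reach a threshold of 2.
    return mcp_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # One active input is enough to reach a threshold of 1.
    return mcp_neuron([a, b], [1, 1], threshold=1)


def logical_not(a):
    # A negative weight (an assumption of this sketch) suppresses firing.
    return mcp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, logical_and(a, b), logical_or(a, b))
```

Because each unit computes a simple logic function, wiring several of them together can express more complex logical statements, which is what made the model interesting to early researchers.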

In the late 1950s and early 1960s, researchers began to build programs that could reason and, in some cases, learn from experience. One of the earliest examples was the General Problem Solver (GPS), developed by Allen Newell, Herbert Simon, and J. C. Shaw. GPS was designed to solve any problem that could be stated formally as a set of goals and the rules for reaching them, although in practice it handled only small, well-defined puzzles.

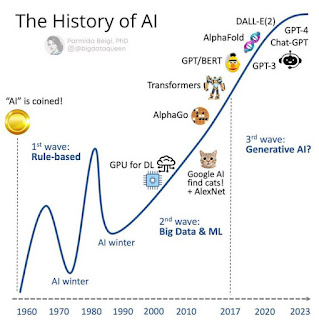

The AI Winter:

Despite these early breakthroughs, progress fell short of expectations, and by the mid-1970s many researchers and funders had become disillusioned with the field. This period is known as the "AI Winter": funding for AI research dried up, and many researchers left the field. A small group continued the work, however, and in the 1980s progress began to accelerate again.

Expert Systems:

One of the breakthroughs of the 1980s was the widespread adoption of expert systems, computer programs that mimic the decision-making of a human expert in a particular domain, typically by applying a set of if-then rules to known facts. Expert systems were used in a variety of applications, such as medical diagnosis, financial analysis, and engineering design.
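Many expert systems worked by repeatedly applying if-then rules to a set of known facts until nothing new could be concluded, a strategy called forward chaining. The sketch below shows that loop in Python. The rules and fact names are invented for illustration and do not come from any real system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules to the known facts until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Toy rule base (hypothetical): each rule is (conditions, conclusion).
RULES = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "refer_to_doctor"),
]

if __name__ == "__main__":
    print(sorted(forward_chain({"fever", "cough", "short_of_breath"}, RULES)))
```

Note that the second rule can only fire after the first has added its conclusion, which is why the loop keeps running until a full pass produces no change.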

Machine Learning:

Another breakthrough of the 1980s was the revival of machine learning, in which computers learn from data instead of following hand-written rules. The best-known algorithm from this period is backpropagation, popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams. It trains an artificial neural network by passing the output error backward through the layers to work out how much each weight should change. With machine learning, computers could begin to perform tasks that were previously thought to be too complex for machines.
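For readers who want to see what backpropagation looks like in practice, here is a minimal sketch in plain Python for a tiny network with one hidden layer. The network size, the sigmoid activation, the squared-error loss, and all function names are assumptions chosen to keep the example short; real systems use optimized libraries.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Return the hidden activations and the output for one input vector."""
    w1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)
    return h, y


def loss(params, x, t):
    """Squared error between the network output and the target t."""
    return 0.5 * (forward(params, x)[1] - t) ** 2


def backprop(params, x, t):
    """Gradients of the loss for one example, computed with the chain rule."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    delta_out = (y - t) * y * (1 - y)  # error signal at the output unit
    grad_w2 = [delta_out * hi for hi in h]
    # Pass the error signal backward through the output weights.
    delta_hidden = [delta_out * w * hi * (1 - hi) for w, hi in zip(w2, h)]
    grad_w1 = [[d * xi for xi in x] for d in delta_hidden]
    return grad_w1, delta_hidden, grad_w2, delta_out


def sgd_step(params, x, t, lr=0.1):
    """Move every parameter a small step against its gradient."""
    w1, b1, w2, b2 = params
    g_w1, g_b1, g_w2, g_b2 = backprop(params, x, t)
    new_w1 = [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(w1, g_w1)]
    new_b1 = [b - lr * g for b, g in zip(b1, g_b1)]
    new_w2 = [w - lr * g for w, g in zip(w2, g_w2)]
    return new_w1, new_b1, new_w2, b2 - lr * g_b2


if __name__ == "__main__":
    # Arbitrary starting weights for a 2-input, 2-hidden-unit, 1-output network.
    params = ([[0.3, -0.2], [0.1, 0.4]], [0.0, 0.1], [0.5, -0.6], 0.2)
    x, t = [1.0, 0.0], 1.0
    print("loss before:", loss(params, x, t))
    print("loss after: ", loss(sgd_step(params, x, t), x, t))
```

Training a network amounts to repeating `sgd_step` over many examples, many times. The key point is that the hidden-layer error is derived from the output error, so the same procedure extends to networks with more layers.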

The Rise of Big Data and Deep Learning:

The 21st century has seen explosive growth in the amount of data available, which, together with faster hardware, has made new AI techniques such as deep learning practical. Deep learning is a type of machine learning that uses neural networks with many layers, each of which extracts progressively more abstract features from the data. It has been applied to speech recognition, image recognition, and natural language processing, among other tasks.

Conclusion:

In conclusion, the history of AI has been characterized by both breakthroughs and setbacks, but over the long run the field has kept advancing. AI has come a long way since its beginnings in the myths of ancient Greece, and the development of electronic computers in the mid-20th century made the first real breakthroughs possible. Despite the setbacks of the AI Winter, the field has continued to advance, and recent breakthroughs in deep learning have the potential to revolutionize many fields.
